In the rapidly evolving landscape of artificial intelligence and neuromorphic engineering, a groundbreaking innovation is capturing the attention of researchers and industry leaders alike: neuromorphic vision chips inspired by the human retina. These chips use pulse neural networks to mimic the biological processes of vision, a significant step toward visual processing systems that are both low-power and fast. Traditional image sensors capture a sequence of full frames at a fixed rate and then process them through complex algorithms. These retina-inspired chips instead take an event-driven approach, which lets them respond dynamically and in real time to visual stimuli, much like the human eye. This technology is not merely an incremental improvement but a paradigm shift, with the potential to transform fields ranging from autonomous vehicles and robotics to medical imaging and surveillance.

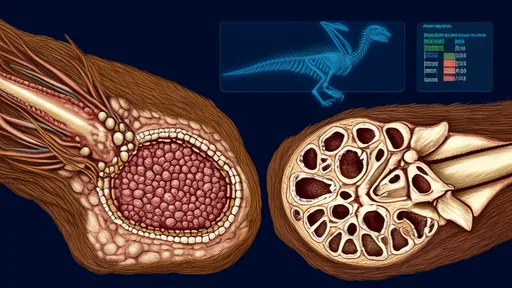

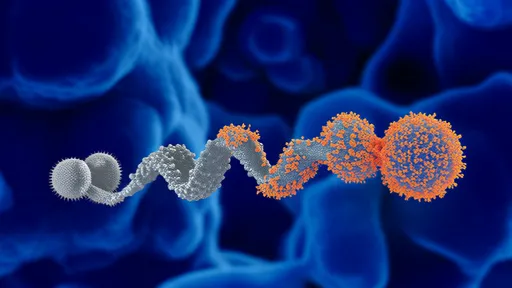

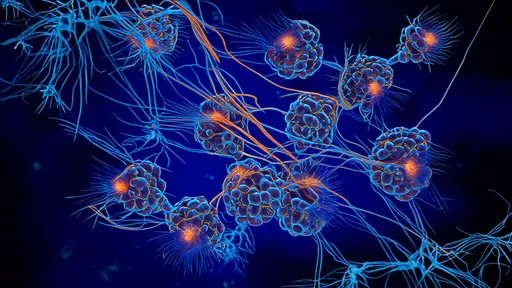

The core of this innovation lies in its biomimetic design, which closely emulates the structure and function of the biological retina. The retina does not passively record entire scenes. Instead, it processes visual information through layers of neurons that respond selectively to changes in light, such as edges, motion, or contrast. This encoding reduces the vast amount of raw visual data to its essential features before signals are transmitted to the brain. Similarly, neuromorphic vision chips incorporate pulse neural networks (also known as spiking neural networks), which represent information as discrete, asynchronous pulses, or "spikes." Each pixel operates independently and generates an event only when it detects a change in luminance, which drastically reduces data redundancy and power consumption. This event-driven mechanism stands in stark contrast to conventional vision systems. Those systems read out and process every pixel at a fixed frame rate whether or not anything in the scene has changed, which wastes bandwidth and adds delay.
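
The per-pixel behavior described above can be sketched in a few lines of Python. This is a minimal sketch, not any particular chip's circuit. It assumes the common dynamic-vision-sensor model: each pixel compares the logarithm of its current intensity with a stored reference, and it emits an ON or OFF event when the difference crosses a contrast threshold. The following are illustrative assumptions:

- the function name `dvs_events`;
- the `contrast_threshold` and `eps` values;
- the use of sampled frames as input.

A real sensor works asynchronously in analog circuitry and can emit several events for one large change. This simplification emits at most one event per pixel per sample.

```python
import numpy as np

def dvs_events(frames, timestamps, contrast_threshold=0.2, eps=1e-3):
    """Convert sampled intensity frames into DVS-style (t, y, x, polarity) events.

    frames: array of shape (T, H, W) with non-negative intensities.
    timestamps: sequence of T sample times (e.g. microseconds).
    polarity is +1 for an ON (brighter) event and -1 for an OFF (darker) event.
    """
    frames = np.asarray(frames, dtype=float)
    # Each pixel stores the log intensity at which it last emitted an event.
    reference = np.log(frames[0] + eps)
    events = []
    for t, frame in zip(timestamps[1:], frames[1:]):
        log_i = np.log(frame + eps)
        diff = log_i - reference
        # Each pixel fires independently when its log-intensity change crosses the threshold.
        fired = np.abs(diff) >= contrast_threshold
        for y, x in zip(*np.nonzero(fired)):
            events.append((t, int(y), int(x), 1 if diff[y, x] > 0 else -1))
        # Only pixels that fired reset their reference; the rest keep accumulating change.
        reference[fired] = log_i[fired]
    return events


# A bright spot on a uniform background moves one pixel between the last two samples.
frames = np.full((3, 4, 4), 10.0)
frames[:2, 1, 1] = 100.0
frames[2, 1, 2] = 100.0
print(dvs_events(frames, [0, 10, 20]))  # prints [(20, 1, 1, -1), (20, 1, 2, 1)]
```

Only the two pixels the spot left and entered produce events, and the unchanged sample at t=10 produces none. Because the comparison is made on log intensity, scaling every frame by the same factor (as with dimmer illumination) leaves the event pattern of this moving spot largely unchanged. This reflects the change-driven behavior the paragraph describes.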

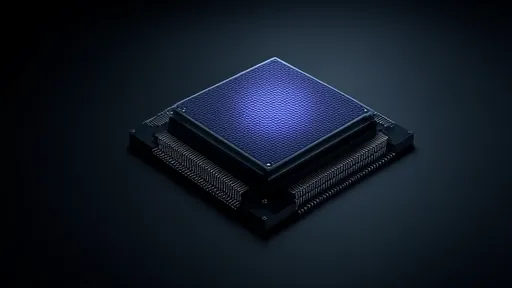

One of the most compelling advantages of these retina-inspired chips is their energy efficiency. Traditional computer vision systems rely on frame-based cameras and von Neumann architectures, so they suffer from the "memory wall" problem: shuttling data between processors and memory consumes substantial power and introduces latency. Neuromorphic chips instead integrate sensing and processing at the pixel level, enabling in-sensor computing that minimizes data movement. Pulse neural networks communicate sparsely, since only active neurons transmit spikes, and this matches the event-driven nature of the hardware. As a result, these chips can operate on power budgets on the order of milliwatts. That makes them well suited to battery-powered applications such as mobile devices, drones, and Internet of Things (IoT) sensors. This efficiency does not come at the cost of speed: asynchronous processing allows microsecond-level response times, which are critical for real-time tasks such as collision avoidance in autonomous systems.
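
Sparse spiking can be illustrated with a layer of leaky integrate-and-fire (LIF) neurons, one of the simplest and most widely used spiking-neuron models. The article does not say which neuron model these chips implement, so treat this as a hypothetical sketch. The function `lif_layer` and its `tau` and `v_threshold` defaults are illustrative, and time is discretized into fixed steps. Each neuron leaks, integrates its input, and emits a spike (then resets) only when its membrane potential crosses the threshold. Neurons with no input stay silent and transmit nothing.

```python
import numpy as np

def lif_layer(input_current, tau=20.0, v_threshold=1.0, dt=1.0):
    """Simulate a layer of leaky integrate-and-fire neurons in discrete time.

    input_current: array of shape (T, N), the input to N neurons at each of T steps.
    Returns a boolean spike raster of shape (T, N).
    """
    inputs = np.asarray(input_current, dtype=float)
    steps, n = inputs.shape
    v = np.zeros(n)                      # membrane potentials, resting at 0
    spikes = np.zeros((steps, n), dtype=bool)
    decay = np.exp(-dt / tau)            # per-step leak factor
    for t in range(steps):
        # Leak toward the resting potential, then integrate this step's input.
        v = v * decay + inputs[t]
        fired = v >= v_threshold
        spikes[t] = fired
        # Neurons that spiked reset to rest; silent neurons send nothing.
        v[fired] = 0.0
    return spikes


# Hypothetical input: only neuron 0 receives a constant drive.
drive = np.zeros((100, 4))
drive[:, 0] = 0.3
raster = lif_layer(drive)
print(raster.sum(), "spikes out of", raster.size, "possible outputs")
# prints: 25 spikes out of 400 possible outputs
```

Only the driven neuron ever fires, so most entries of the raster are zero. In event-driven hardware, only those nonzero entries would need to be communicated.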

Beyond efficiency, the adaptability and robustness of these systems open new frontiers in machine perception. Pulse neural networks, with their temporal coding and event-driven dynamics, are well suited to dynamic environments where changing lighting, motion, and occlusion challenge traditional vision algorithms. In automotive applications, for instance, a neuromorphic vision chip can detect pedestrians or obstacles in low-light or high-glare scenes. It can do so because its pixels respond primarily to relative changes in brightness rather than to absolute intensity. Moreover, spiking networks, like their biological counterparts, are inherently tolerant of noise, which improves reliability in unpredictable real-world settings. Researchers are also exploring on-chip learning, in which the network adapts its parameters to the incoming visual stream. This could pave the way for lifelong learning systems that improve continuously without external intervention.
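
The article does not specify how on-chip learning works. One widely studied candidate for spiking hardware is spike-timing-dependent plasticity (STDP). Under STDP, a synapse is strengthened when the presynaptic spike precedes the postsynaptic spike and weakened when the order is reversed, and the effect decays exponentially with the time gap. The following is a minimal sketch of an all-to-all, pair-based STDP rule. The function `stdp_update` and the constants `a_plus`, `a_minus`, `tau_plus`, and `tau_minus` are hypothetical choices, not parameters of any specific chip.

```python
import math

def stdp_update(weight, pre_spike_times, post_spike_times,
                a_plus=0.01, a_minus=0.012, tau_plus=20.0, tau_minus=20.0,
                w_min=0.0, w_max=1.0):
    """Return an updated synaptic weight using all-to-all pair-based STDP.

    Spike times share one unit (e.g. milliseconds). Every pre/post spike pair
    contributes an exponentially weighted change.
    """
    dw = 0.0
    for t_pre in pre_spike_times:
        for t_post in post_spike_times:
            delta = t_post - t_pre
            if delta > 0:
                # Pre fired before post: potentiate (strengthen).
                dw += a_plus * math.exp(-delta / tau_plus)
            elif delta < 0:
                # Post fired before pre: depress (weaken).
                dw -= a_minus * math.exp(delta / tau_minus)
    # Clip so the weight stays within its allowed range.
    return min(w_max, max(w_min, weight + dw))


# A causal pairing (pre at 10 ms, post at 15 ms) strengthens the synapse;
# the reversed order weakens it.
print(stdp_update(0.5, [10.0], [15.0]))
print(stdp_update(0.5, [15.0], [10.0]))
```

Making `a_minus` slightly larger than `a_plus` is a common choice that keeps weights from growing without bound under uncorrelated activity. Because the update uses only spike times available locally at the synapse, rules of this kind are attractive for on-chip learning.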

The development of these chips is fueled by interdisciplinary advances in materials science, neuroscience, and electrical engineering. Fabricating such devices requires memristive devices or specialized CMOS circuits that can emulate neuronal and synaptic behavior at scale. Recent prototypes have demonstrated impressive feats, such as recognizing gestures, tracking high-speed objects, and even replicating basic visual reflexes like the optokinetic response. However, challenges remain in scaling the technology to complex tasks, ensuring manufacturability, and integrating with existing AI frameworks. Despite these hurdles, progress has been steady, with several startups and academic institutions pushing the boundaries of what's possible. Collaboration between neuroscientists and engineers is crucial, as deeper insight into retinal circuitry inspires more refined and powerful designs.

Looking ahead, the implications of neuromorphic vision chips extend far beyond current applications. In healthcare, they could lead to visual prosthetics that restore sight with unprecedented naturalness, or to portable diagnostic tools that analyze medical images in real time. In smart cities, they might enable pervasive, privacy-aware surveillance that processes only relevant events without storing raw video. Furthermore, as artificial intelligence strives toward greater autonomy and efficiency, these chips offer a tangible path to embodied AI: systems that perceive and act in the physical world with human-like grace and economy. Although the technology is still in its infancy, it is poised to disrupt multiple industries, embodying the convergence of biological inspiration and engineering prowess.

In conclusion, the emergence of neuromorphic vision chips based on retinal principles and pulse neural networks marks a transformative moment in visual computing. By borrowing the efficient design of biological vision, these chips address critical limitations of conventional systems and offer substantial gains in energy efficiency, speed, and adaptability. As research accelerates and commercialization efforts gain momentum, we stand on the cusp of a new era. In it, machines will see and interpret the world not as a series of snapshots but as a flowing, dynamic tapestry of events, much as we do. This synergy between biology and technology not only advances engineering goals but also deepens our understanding of perception itself, blurring the line between artificial and natural intelligence.

By /Aug 25, 2025